The History of Cloud Computing

Cloud computing is the use of internet-connected servers to host software and virtual infrastructure that are accessed and controlled over the web or through an API. The phrase “cloud computing” has only been in widespread use since the mid-2000s, but the history of cloud computing stretches back much further. In this article, we provide a brief history of cloud computing from the 1960s to today.


What is Cloud Computing?

Before we begin our discussion of the history of cloud computing, it’s worth explaining what the term means. When we talk about cloud computing today, we mean servers in a remote data center that perform functions for remote clients.

This can be more complex, with clusters of servers forming a “cloud”, but the term cloud computing can refer to any remote server, even a single virtual machine or dedicated server. This is in contrast to an on-premises server, which is physically located at your own site.

The Origins of Cloud Computing – 1960s: Mainframes And Terminals

To find out when the cloud was invented and explore the history of cloud computing, we have to venture back almost 70 years.

In the 1950s and 1960s, computers were enormous, expensive, and only a reality for corporations and large organizations like universities. This was the age of the mainframe. Each mainframe was a multiuser computer — massively powerful by the standards of the time — which human operators interacted with via a terminal.

Throughout this period and into the 1970s, the way operators interacted with computers evolved from punch cards, through teletype printers, to primitive screen terminals that were the ancestors of today’s command line terminals.

Mainframe clients had almost no computing power. They were simply interfaces to the mainframe, which could be located a long way from the operator and connected over a dedicated network.

You can see why this model of computing is considered a direct ancestor of cloud computing. As with the cloud, most of the work is done on physical “servers” that the user doesn’t directly interact with and that are not located on the local network. If you’re wondering when cloud computing started, these early mainframes were its origin.


The Birth Of The Internet

At the tail end of the 1960s, ARPA (later renamed DARPA) was hard at work on ARPANET, a packet-switching network that became the proving ground for the principles and technologies that power web services on the internet today.

The internet as we know it was still more than a decade in the future. There was no web, and network email did not appear on ARPANET until 1971. However, as ARPANET and its successors grew through the 1970s, they linked institutions and corporations whose mainframes and minicomputers were still accessed through terminals. In that arrangement, we begin to see something that resembles modern cloud computing.

The bandwidth available in this period was a small fraction of what even the most basic cellphone plan provides today. Still, it was enough to support a busy community of scientists, researchers, and corporations carrying out advanced research over these new networks.

1970s: Virtual Machines

Today’s elastic compute cloud services wouldn’t be possible without virtualization, which allows us to run many virtual servers on a single physical server. IBM developed some of the earliest virtualization technology. With its VM operating system, released for System/370 mainframes in 1972, mainframe owners could run virtual machines much as we do today.

Interestingly, although VM was first released in 1972, its descendant, z/VM, is still used today by companies with mainframes. It’s often used to run Linux virtual machines.

As you might imagine, virtualization has come a long way in the almost five decades since it was introduced. Today, it’s trivially easy to launch multiple virtual servers on our laptops, and enterprise-grade virtualization tools like VMware, Xen, and Microsoft Hyper-V power much of the web.

1990s: The Web And Software-As-A-Service

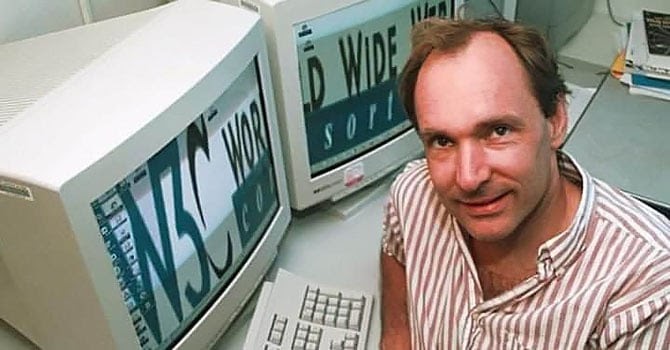

The World Wide Web was invented in 1989 at CERN by Tim Berners-Lee, an English researcher. The first web browser was released a couple of years later.

The web was — and is — a technology for linking hypertext documents and other resources. The web rides atop the internet, and has been called the internet’s killer application. The development of the web led directly to the massive expansion of the internet, huge investment in networking technology, and a wave of social changes we’re still riding today.

In the early years of the web, the available bandwidth was still meagre, but as businesses and consumers embraced the web, the infrastructure hosting industry was born. Many larger companies at this time used on-premises data centers, but throughout the late 90s and early 2000s, the data center industry boomed, and shared hosting and dedicated servers became the hosting platforms of choice.

It was also in this period that the first Software-as-a-Service applications were released. One of the first big successes in the SaaS space was Salesforce, which used the improved bandwidth and hosting technology to provide enterprise-grade CRM software accessed with a web browser.

2000s: Infrastructure-as-a-Service And The Modern Cloud

The first recognizable Infrastructure-as-a-Service platforms became publicly available in 2006. Amazon Web Services and its competitors offered on-demand compute and storage. This radically changed the way businesses pay for, think about, and manage their infrastructure, and it fueled rapid innovation in the startup space.

Several years before the first Infrastructure-as-a-Service platforms were released, ServerMania was founded to provide inexpensive infrastructure hosting to small and medium businesses. Over the next decade and a half, ServerMania embraced virtualization and the cloud.

Public Clouds

When we say “cloud” in casual conversation, we usually mean public cloud. Public cloud computing services use virtualization and modern network technology to provide on-demand scalable compute and storage. Many of the largest infrastructure users in the world depend on cloud infrastructure, as do hundreds of thousands of smaller businesses.

Private Clouds

Enterprise hosting clients wanted privacy and control. In response, IT service providers began offering private clouds, which provide many of the same benefits as the public cloud. The major difference is that all of the cloud servers are dedicated to, and controlled by, a single organization. Custom private clouds let companies take advantage of virtualization in a private, isolated environment that can be tailored to the specific requirements of their workloads.

Hybrid Clouds

In the early days of public and private clouds, pundits pitted the two against each other, wondering which would come to dominate. In reality, public and private clouds are complementary, and many enterprise infrastructure users find a place for both. This gave rise to hybrid clouds. They integrate public and private cloud platforms, as well as bare metal dedicated servers, which still have a major role to play.

2020: High-Availability Cloud

When the cloud first developed, the deal between cloud providers and their clients was this: we provide the infrastructure, and it’s up to you to build a reliable, consistently available cloud computing platform on top of it. Most cloud vendors haven’t evolved much beyond that approach.

Another approach was to deliver a robust cloud computing platform, such as Amazon’s Elastic Compute Cloud (EC2), but to hide support behind paywalls and a complex control panel. At ServerMania, we don’t believe that a complete cloud solution needs to be complex.

ServerMania’s Public Cloud platform goes a step beyond traditional cloud vendors: our cloud server platform is fully redundant and highly available. We take care of redundancy and availability, a key aspect of cloud management, so that you can focus on building the applications and services that will help your business flourish.

