Low Latency Servers For Faster Performance

“Low-latency” means minimal round-trip time. For workloads like HFT, competitive gaming, and video conferencing, even a few milliseconds can be critical.

Latency is the delay users notice most directly, which is why businesses work hard to minimize it across their infrastructure.

ServerMania has built its reputation on delivering exactly that. With our premium low-latency server infrastructure designed for mission-critical workloads, clients trust ServerMania to achieve low latency and sustain it. Whether it’s dedicated hosting, power-intensive workloads supported by GPU server hosting, or seamless scalability with unmetered bandwidth servers, the focus stays on performance.

By optimizing our network and placing infrastructure closer to users, ServerMania powers latency-sensitive industries where milliseconds matter.

In this quick guide, we’ll walk you through low-latency hardware and why it matters so much.

Why is Latency Important

In almost any computer network, a fast internet connection alone can’t guarantee excellent performance for latency-sensitive applications. What matters more is how quickly data packets travel from one device to another.

In short, the latency end users experience determines whether real-time access feels fast or is held back by lag that delays every response.

Low latency = fast responsiveness

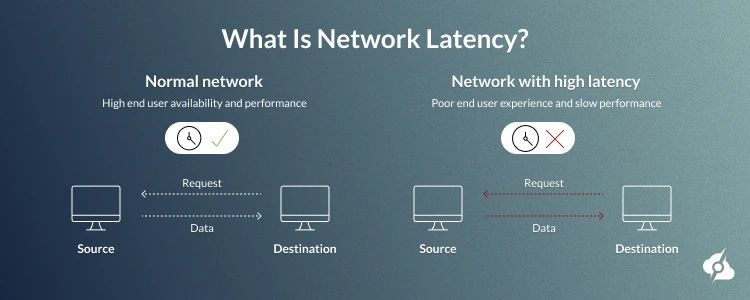

What is Latency (Ping)

At its foundation, latency is the delay between sending data and receiving a response over your network connection, measured in milliseconds (ms). The figure reflects the time data packets take to complete a round trip.

High network latency means delay. It can appear even on high-end networking hardware, and it stems from network congestion, signal degradation, or the transmission medium itself (like copper cable or wireless transmission). In the real world, every bit of delay can directly impact system and application performance, ultimately leading to a poor user experience.

Low Latency Vs. High Latency

Understanding the difference between low latency and high latency is critical because it directly shapes application code, network communication, and responsiveness in such applications as virtual reality, VoIP calls, or financial trading.

| Aspect | Low Latency | High Latency |
|---|---|---|
| Response Times | Feels instant, with minimal delay. | Noticeably longer delays or pauses. |
| User Inputs | Immediate reaction in online gaming or augmented reality. | Delayed actions leading to motion sickness or frustration. |
| Network Speed | Optimized network switches and network devices ensure smooth data transmission. | Network problems and bottlenecks negatively affect latency. |
| Real Time Communication | Works flawlessly in video conferencing and VoIP calls. | Minor delays disrupt conversations; such delays harm the flow. |
| Throughput Measures | High system performance supports high volumes of data packets. | Limited capacity, weaker throughput measures. |
| Applications | Essential for low-latency streaming, real-time communication, and high-frequency trading servers. | Acceptable only in non-critical tasks or bulk transfers. |

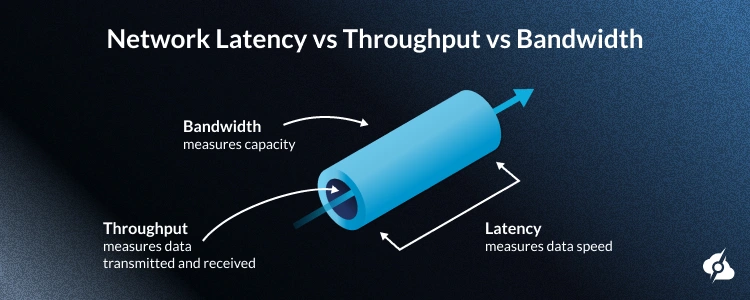

Latency Vs. Bandwidth Vs. Throughput

If you work in IT, you’ve probably heard the terms latency, bandwidth, and throughput, and if you haven’t looked closely at each one, you may think of them as the same thing. While they are closely related, they describe different aspects of network performance.

- Latency – Latency is all about time delay: the time from the moment you send a data packet to the moment you receive a response.

- Bandwidth – Bandwidth is capacity: the maximum amount of data the transmission medium can carry per second.

- Throughput – Throughput is the reality check: how much data was actually transmitted successfully per second, after factoring in wireless connections, signal degradation, and more.
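To see how the three terms interact, here is a minimal Python sketch under a deliberately simplified model (an assumption for illustration, not a measurement method): a single request costs one round trip plus the time needed to push the payload through the link. The function names and sample figures are hypothetical.

```python
def transfer_time_s(payload_bytes: int, rtt_ms: float, bandwidth_mbps: float) -> float:
    """Simplified model: one round trip plus serialization time on the link."""
    serialization_s = (payload_bytes * 8) / (bandwidth_mbps * 1_000_000)
    return rtt_ms / 1000 + serialization_s


def effective_throughput_mbps(payload_bytes: int, rtt_ms: float, bandwidth_mbps: float) -> float:
    """Throughput actually achieved for this one transfer, in megabits per second."""
    seconds = transfer_time_s(payload_bytes, rtt_ms, bandwidth_mbps)
    return (payload_bytes * 8) / seconds / 1_000_000


# Same 100 KB payload and the same 1 Gbps link, with two different round-trip times.
for rtt in (5, 100):
    mbps = effective_throughput_mbps(100_000, rtt, 1000)
    print(f"RTT {rtt} ms -> {mbps:.1f} Mbps")
# RTT 5 ms -> 137.9 Mbps
# RTT 100 ms -> 7.9 Mbps
```

With identical bandwidth, raising the round-trip time from 5 ms to 100 ms drops the effective throughput of this small transfer from roughly 138 Mbps to about 8 Mbps, which is why bandwidth alone does not describe responsiveness.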

How To Measure Latency

There are quite a few ways to measure latency on your infrastructure, ranging from a DIY home internet test to enterprise-grade network monitoring. The main thing to remember here is that the closer to zero the latency appears, the better, as this means less delay.

Here are some traditional ways of measuring latency:

| Method | How It Works | Purpose / Insight |
|---|---|---|
| Ping (ICMP command) | Sends a small data packet to another device and measures the round-trip time. | Provides a simple snapshot of network latency. |
| Traceroute | Maps the path of a packet through each network node. | Reveals where delays occur along the route. |
| Application-level monitoring | Uses built-in or third-party tools to track response times within an app or service. | Shows latency inside specific applications. |
| Network monitoring solutions | SNMP-based tools or packet analyzers monitor overall network traffic. | Detects network congestion and potential bottlenecks. |
| Real User Monitoring (RUM) | Collects timing data from actual user interactions. | Reflects the latency experienced in real-world scenarios. |

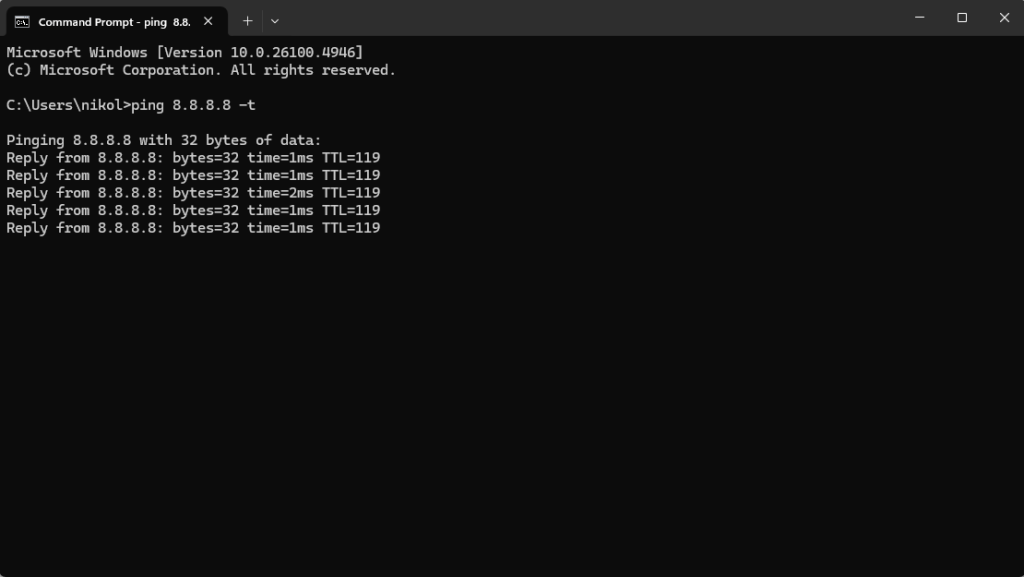
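As a rough application-level check, connection setup time can also be sampled from code. The sketch below times the TCP handshake instead of sending ICMP packets, since raw ICMP sockets often require elevated privileges; that swap is an assumption of this example, and the helper names `tcp_connect_ms` and `sample_latency` are hypothetical. Handshake time includes some operating-system overhead, so the numbers only approximate round-trip time.

```python
import socket
import statistics
import time


def tcp_connect_ms(host: str, port: int, timeout_s: float = 2.0) -> float:
    """Time one TCP handshake to host:port, in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout_s):
        pass  # connection established; close it right away
    return (time.perf_counter() - start) * 1000


def sample_latency(host: str, port: int, count: int = 5) -> dict:
    """Collect several handshake timings and summarize them."""
    samples = [tcp_connect_ms(host, port) for _ in range(count)]
    return {
        "min_ms": min(samples),
        "avg_ms": statistics.mean(samples),
        "max_ms": max(samples),
    }
```

Calling `sample_latency("example.com", 443)` would return the minimum, average, and maximum handshake time for that host; the hostname is only a placeholder.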

Measuring Latency on Home Broadband

If you want a straightforward latency test at home, there is a quick way to do it on both Windows and macOS. Open the Command Prompt (“CMD”) on Windows or the Terminal on macOS and ping a destination.

Here’s how it works:

- On Windows: Open the CMD, type “ping 8.8.8.8 -t”, and hit Enter to start sending packets and receiving responses. The “-t” option keeps pinging until you stop it with Ctrl+C.

- On macOS: Open the Terminal and type “ping -c 100 8.8.8.8” to send 100 packets and receive their responses.

Essentially, what we’re doing here is repeatedly sending network packets to Google’s public DNS server (8.8.8.8) and measuring the time it takes to receive each response.

In the example shown in the image above, a response comes back in 1 to 2 milliseconds (ms), which is considered low latency. Latency starts to take a toll above 150 ms, especially in video gaming and other ping-sensitive applications.
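If you save the ping output to a text file, a short script can summarize it. The following is a minimal sketch that assumes each reply line carries a `time=` (or `time<`) field followed by `ms`, as in the common Windows and macOS reply formats; the function name `summarize_ping` is hypothetical, and the 150 ms default is the threshold mentioned above.

```python
import re
import statistics

# Matches "time=14ms", "time<1ms" (Windows) and "time=14.2 ms" (macOS/Linux).
RTT_PATTERN = re.compile(r"time[=<]([\d.]+)\s*ms")


def summarize_ping(output: str, threshold_ms: float = 150.0) -> dict:
    """Extract round-trip times from ping output and summarize them."""
    rtts = [float(value) for value in RTT_PATTERN.findall(output)]
    if not rtts:
        raise ValueError("no round-trip times found in ping output")
    return {
        "count": len(rtts),
        "min_ms": min(rtts),
        "avg_ms": statistics.mean(rtts),
        "max_ms": max(rtts),
        "over_threshold": sum(1 for rtt in rtts if rtt > threshold_ms),
    }
```

The `over_threshold` count is a quick way to spot how many replies crossed the point where lag becomes noticeable.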

What is Low Latency in a Server

So far, we’ve looked at latency in general, but in a server, latency takes several forms, depending on the hardware component involved.

Here are the major types of latency in servers:

Network Latency (Physical Distance and Routing)

We already know what network latency is: the time it takes for network data to reach its destination and return to you. Long distances, multiple hops, or bad routing can all raise your ping and cause high latency in latency-dependent workflows.

Processing Latency (CPU and App Response Time)

Processing latency is an entirely different thing. It occurs when a server struggles to keep up with user inputs and can’t execute application code fast enough. This type of latency originates on the server itself, in its hardware and the software running on it, and has nothing to do with the network or broadband speed.
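One way to observe processing latency in isolation is to time a request handler directly, with no network involved. This is a minimal sketch, assuming the handler is a plain Python callable; `measure_processing_ms` is a hypothetical helper name, and the percentile choice is illustrative.

```python
import statistics
import time
from typing import Callable


def measure_processing_ms(handler: Callable[[], object], runs: int = 200) -> dict:
    """Run the handler repeatedly and report median, 99th percentile, and worst case."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        handler()
        timings.append((time.perf_counter() - start) * 1000)
    # n=100 yields 99 cut points; index 98 is the 99th percentile.
    cuts = statistics.quantiles(timings, n=100, method="inclusive")
    return {
        "p50_ms": statistics.median(timings),
        "p99_ms": cuts[98],
        "max_ms": max(timings),
    }
```

Comparing the median with the 99th percentile shows whether the server is consistently fast or only fast on average, which matters for latency-sensitive workloads.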

Storage Latency (Disk I/O and Database Queries)

Storage latency is also hardware-related, but it is tied to the storage solution (HDD, SSD, or NVMe) rather than to processing power. It occurs when reads and writes complete slower than expected, impacting performance.
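A rough feel for storage write latency can be obtained by timing small synchronous writes. The sketch below is an assumption-laden illustration rather than a benchmark: `fsync_write_latency_ms` is a hypothetical helper, it writes to the system temporary directory (which may be RAM-backed on some systems), and dedicated tools give far more reliable numbers.

```python
import os
import tempfile
import time


def fsync_write_latency_ms(block_size: int = 4096, runs: int = 20) -> list:
    """Time small writes that are forced to the storage device with fsync."""
    payload = os.urandom(block_size)
    timings = []
    with tempfile.NamedTemporaryFile() as handle:
        for _ in range(runs):
            start = time.perf_counter()
            handle.write(payload)
            handle.flush()             # push Python's buffer to the OS
            os.fsync(handle.fileno())  # ask the OS to flush to the device
            timings.append((time.perf_counter() - start) * 1000)
    return timings
```

Running it on an HDD, a SATA SSD, and an NVMe drive would typically show the per-write delay shrinking at each step.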

Low Latency Servers in Real World Use Cases

Low-latency machines are crucial for businesses that rely on real-time access, fast data transmission, and minimal delay. From gaming platforms to financial services, a low-latency network ensures smooth operations, responsive applications, and a superior user experience.

Multiplayer Gaming

For online gaming and low-latency game servers, every millisecond counts. Low-latency machines reduce lag and improve response times, giving players a competitive edge. ServerMania’s Game Servers are optimized for low-latency network performance with minimal delay, perfect for multiplayer games and e-sports platforms.

Mining & Rendering

Businesses running AI workloads, virtual reality, or data-intensive applications benefit from GPU Server Hosting. With ultra-low latency and powerful processing capabilities, these servers support real-time communication, sensor data analysis, and complex application performance requirements.

Learn more about GPU Server Hosting.

Streaming & CDNs

Platforms delivering low-latency streaming or managing content delivery networks (CDNs) require servers that handle high volumes of data without slowing down.

ServerMania’s Unmetered Bandwidth Servers ensure more bandwidth, minimal latency, and consistent network speed, ideal for video conferencing, content delivery, and quick access.

Causes of Server Latency and How to Reduce Latency

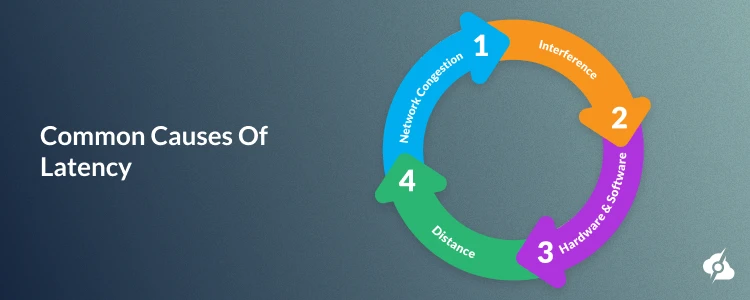

Server latency of any type can impact user experience in almost any business, no matter how powerful your server or how fast your network provider is. Understanding its causes is critical, so we’ve prepared a quick breakdown below.

| Source | Explanation | Optimization |
|---|---|---|
| Geographic Distance | Data has to physically travel between the server and user, constrained by the speed of light. | Deploy servers closer to key user bases; use edge locations or CDN nodes. |
| Congestion & Routing | Data passes through multiple network hops and can be delayed by overloaded routes. | Optimize routing, implement direct peering with major ISPs, and monitor network paths. |
| Hardware Limitations | CPU, RAM, or other server components may not process requests quickly enough under load. | Upgrade to high-frequency CPUs, increase RAM, and utilize efficient server architectures. |
| Software Inefficiencies | Poorly optimized applications, inefficient code, or unnecessary processing steps. | Optimize code, implement application-level caching, and reduce unnecessary processing. |
| Database & Storage Performance | Slow disk I/O or inefficient queries can delay data retrieval. | Use NVMe storage, in-memory databases, optimize queries, and implement caching layers. |
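The geographic distance row can be quantified with simple arithmetic. This sketch estimates the lowest possible round-trip time over optical fiber, assuming light travels through fiber at roughly two thirds of its vacuum speed (a common approximation, labeled as an assumption here) and ignoring routing detours, queuing, and processing; `min_rtt_ms` is a hypothetical helper name.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_VELOCITY_FACTOR = 0.67       # assumption: light in fiber travels at about 2/3 c


def min_rtt_ms(distance_km: float, velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Lower bound on round-trip time for a given one-way fiber distance."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor)
    return 2 * one_way_s * 1000


for km in (100, 1000, 5000):
    print(f"{km} km -> at least {min_rtt_ms(km):.1f} ms round trip")
```

Under these assumptions, every 1,000 km between user and server adds about 10 ms of round-trip time that no hardware upgrade can remove, which is why placing servers closer to users is listed first.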

Getting to know the primary sources of latency is the fundamental step toward high performance from your server infrastructure. When deploying edge computing, you also need to optimize server processing time and use network monitoring tools to confirm that latency stays low.

Hardware Specifications for Ultra-Low Latency Performance

Achieving ultra-low latency requires careful selection of hardware components designed to handle high-speed data processing with minimal delays. Every part of the server, from CPU to storage and network interfaces, contributes to the overall responsiveness of your system.

For apps like high-frequency financial trading, real-time analytics, and edge computing, optimizing hardware is essential to reduce latency and maintain consistent performance.

High-Frequency CPUs for Minimal Delay

Modern CPU families, such as Intel Xeon W-series or AMD EPYC high-frequency SKUs, include models with base clocks above 3.5 GHz. High core frequencies shorten server processing time by executing instructions faster. Selecting CPUs with low-latency cache access is crucial for workloads that demand microsecond-level responsiveness.

Memory Configuration: Speed vs Capacity

Memory latency is just as critical as capacity. DDR5 modules with low CAS latency help keep memory access latency low (<60 ns), enabling faster access to data and reducing bottlenecks in low-latency workloads. Optimizing memory channels and dual-ranked configurations further helps reduce latency for memory-intensive applications.
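Module datasheets state CAS latency in clock cycles (the CL value), so comparing modules means converting cycles to nanoseconds. The sketch below uses the standard conversion, where the memory clock is half the data rate; the function name and the two example modules are illustrative assumptions, not recommendations.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mt_s: int) -> float:
    """Convert a CL value to nanoseconds: CL cycles times the clock period."""
    clock_mhz = data_rate_mt_s / 2  # DDR transfers twice per clock cycle
    return cl_cycles / clock_mhz * 1000


print(f"DDR5-4800 CL40: {cas_latency_ns(40, 4800):.1f} ns")  # 16.7 ns
print(f"DDR5-6400 CL32: {cas_latency_ns(32, 6400):.1f} ns")  # 10.0 ns
```

A higher CL number does not automatically mean slower memory: the faster clock of the second module more than offsets its cycle count. CAS latency is only one part of total memory access time, which also includes the memory controller and the path to the CPU.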

Storage Solutions: NVMe and RAM Disks

High-end Gen4/Gen5 NVMe drives can exceed one million IOPS, and the fastest devices reach latencies around 10 μs. For ultra-demanding workloads, RAM disks can temporarily store hot data for near-instant access.

This combination keeps storage from becoming the limiting factor and reduces latency for programmable network platforms and other computing solutions.
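IOPS and latency are linked through the number of requests kept in flight (Little's law). The sketch below applies that relationship as a back-of-the-envelope check; the function names are hypothetical and the figures are examples, not drive specifications.

```python
def iops_at(queue_depth: int, latency_us: float) -> float:
    """Requests per second when `queue_depth` requests are always in flight."""
    return queue_depth * 1_000_000 / latency_us


def queue_depth_for(target_iops: float, latency_us: float) -> float:
    """In-flight requests needed to sustain a target IOPS at a given latency."""
    return target_iops * latency_us / 1_000_000


print(iops_at(1, 10))                 # 100000.0
print(queue_depth_for(1_000_000, 10)) # 10.0
```

At 10 μs per request, a single outstanding request yields 100,000 IOPS, so reaching one million IOPS requires about ten requests in flight at once. Headline IOPS figures therefore depend on parallelism, while a latency-sensitive application issuing one request at a time benefits mainly from the per-request latency.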

Network Interface Cards and Hardware

High-speed NICs like Mellanox/NVIDIA ConnectX with RDMA and kernel-bypass support can reduce NIC-level latency to roughly 1 μs or less. Hardware timestamps and the Precision Time Protocol (PTP) allow accurate synchronization for real-time data transmission. In some cases, FPGA-based acceleration further enhances throughput while maintaining ultra-low latency.
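The role of hardware timestamps becomes clearer with the arithmetic behind PTP-style synchronization. This is a minimal sketch of the delay request-response calculation, assuming a symmetric network path; the function name and the nanosecond values are hypothetical.

```python
def ptp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple:
    """
    t1: reference clock sends Sync       (reference time)
    t2: local clock receives Sync        (local time)
    t3: local clock sends Delay_Req      (local time)
    t4: reference clock receives it      (reference time)
    Assumes the path delay is the same in both directions.
    """
    mean_path_delay = ((t2 - t1) + (t4 - t3)) / 2
    offset_from_reference = ((t2 - t1) - (t4 - t3)) / 2
    return offset_from_reference, mean_path_delay


# Example in nanoseconds: local clock runs 500 ns ahead, one-way delay is 200 ns.
print(ptp_offset_and_delay(t1=1000, t2=1700, t3=2000, t4=1700))  # (500.0, 200.0)
```

Because the result depends directly on the four timestamps, any jitter in when they are captured shows up as synchronization error, which is why NICs that timestamp packets in hardware are preferred for this purpose.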

Deploy Ultra-Low Latency Infrastructure with ServerMania

Here at ServerMania, we deliver ultra-low latency solutions for high-demand applications across our hosting lineup: dedicated hosting, cloud hosting, unmetered servers, and specialized offers such as Game Servers, GPU Servers, Storage Servers, and more.

With strategic Canadian locations and a global data center presence, ServerMania helps clients achieve low latency for users everywhere.

Our 24/7 expert support and performance guarantees make deploying programmable network platforms and edge computing systems simple and reliable.

💬 Need a low-latency network server? Book a free consultation with an expert today!
