CPU Cores Vs Threads: Complete Comparison Guide

When it comes to server performance, few topics spark as much confusion and heated debate as the difference between CPU cores and threads.

For developers, IT professionals, and DevOps engineers who manage infrastructure, understanding what’s happening inside the central processing unit isn’t optional; it’s essential.

At ServerMania, we have specialized in high-performance Dedicated Servers and Cloud Servers engineered for the most demanding workloads for over two decades. Our infrastructure is built to fully leverage the power of CPU cores and threads, so your applications run faster, scale smarter, and handle multiple tasks simultaneously.

In this guide, we’ll walk you through single-core vs multi-core CPU setups, the real-world impact of logical cores, and how threads simultaneously interact with physical processing units to deliver (or bottleneck) computing performance.

We’re talking practical insight here, not just textbook definitions.

What is a CPU? – CPU Cores Explained

The CPU, or central processing unit, is the foundation of every computer system and the control center for all other hardware components. It is responsible for everything from booting an operating system to running complex code, performing millions or billions of calculations per second.

In server environments, where system performance, reliability, and scalability matter most, the CPU’s ability to handle multiple tasks simultaneously becomes critical. That’s where the structure of the processor, especially its cores and threads, starts to matter in a big way.

Single Core Processor

A single-core CPU contains just one physical core, capable of executing one task at a time. This does not mean that you can only run one app at a time: the operating system rapidly switches between tasks, but every calculation is still sent to that one real core. Older systems relied on this model, but it struggles with multiple threads and modern multitasking demands.
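The way one core can appear to run several apps at once can be sketched as a simple round-robin simulation. This is only an illustration under stated assumptions: the task names, the "units of work", and the `run_on_single_core` function are hypothetical, and real operating system schedulers are far more sophisticated.

```python
from collections import deque


def run_on_single_core(tasks, slice_units=1):
    """Simulate one core time-slicing between tasks.

    `tasks` maps a (hypothetical) task name to the units of work it needs.
    Returns the order in which the core ran each time slice.
    """
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        timeline.append(name)  # the core runs exactly one task per slice
        remaining -= slice_units
        if remaining > 0:
            queue.append((name, remaining))  # unfinished work goes to the back
    return timeline


timeline = run_on_single_core({"browser": 2, "editor": 1, "backup": 3})
print(timeline)
```

Every task makes progress, but only one of them is ever running at a given moment, which is why a single core struggles as the number of active tasks grows.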

Even today, some single-threaded applications benefit more from a higher clock speed than from a higher core count.

Multi-Core Processor

A multi-core CPU includes multiple CPU cores, each able to handle its own set of tasks. This enables true parallel processing and better performance. In server workloads, more CPU cores allow for smoother handling of multiple threads, improving efficiency and responsiveness.

Understanding CPU Cores and Threads

To make sense of CPU performance, we need to understand how CPU cores and threads work together inside the processor. In short, these two elements determine how many instructions your system can execute simultaneously.

What is a CPU Core?

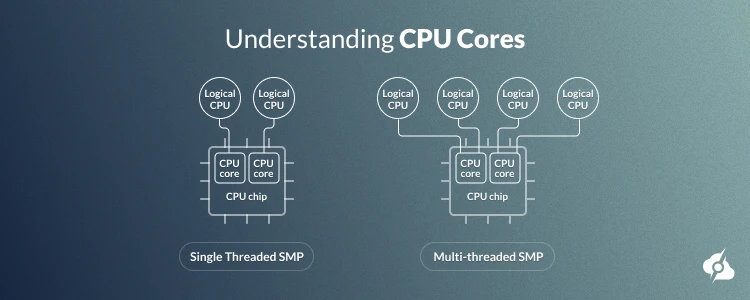

The CPU core is a physical processing unit inside the processor that independently handles the tasks assigned to it. You can picture a core as a mini-processor inside the big processor: the more mini-processors, the more processing power.

Without multithreading technology, each of these mini-processors (cores) handles a single thread of instructions at a time. A dual-core processor therefore has two physical processing units and can run two completely separate tasks simultaneously.

In the most demanding applications, the ability to split the workload across cores is critical and largely determines what your processor can do.

What is a CPU Thread?

Unlike the physical component (core), a CPU thread is a sequence of instructions that the core executes. Most modern processors support a technology called “Simultaneous Multithreading” (SMT), which Intel markets as “Hyper-Threading”, that lets one core handle two threads at a time.

This means that a single core can handle more tasks with the help of these virtual cores (hardware threads, also called logical cores), without the need for additional physical processing units.

This is helpful with applications that run many tasks simultaneously, because threads let your CPU cores handle more work with the same hardware. That’ll make more sense a bit further in the guide.
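To see how these virtual cores show up in practice, a short Python sketch can ask the operating system how many logical CPUs it reports. `os.cpu_count()` counts logical CPUs, so hardware threads are included; the helper names `logical_cpu_count` and `usable_cpu_count` are hypothetical and exist only for this illustration.

```python
import os


def logical_cpu_count():
    """Return the number of logical CPUs the OS reports (at least 1)."""
    # os.cpu_count() counts logical CPUs, so SMT threads are included.
    # It may return None if the count cannot be determined.
    return os.cpu_count() or 1


def usable_cpu_count():
    """Return how many logical CPUs this process is allowed to run on."""
    # os.sched_getaffinity is not available on every platform (it exists
    # on Linux), so fall back to the system-wide count elsewhere.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return logical_cpu_count()


print(f"Logical CPUs reported by the OS: {logical_cpu_count()}")
print(f"Logical CPUs usable by this process: {usable_cpu_count()}")
```

On a machine with SMT enabled, the first number is typically twice the physical core count. The standard library does not report physical cores directly, so that figure has to come from the CPU's specifications or a platform-specific tool.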

Difference Between CPU Cores and Threads

The primary difference between CPU cores and threads is in their nature. Cores are physical hardware units inside the processor, while threads are sequences of instructions that the cores execute, with SMT exposing extra virtual (logical) cores to the operating system. Threads help your CPU cores work more efficiently and do tasks simultaneously, ultimately boosting throughput without additional cores.

However, threads can’t replace cores. More cores typically mean more power for multitasking and running applications, no matter how many threads there are, while more threads improve your server throughput by keeping those cores busy across various tasks and workloads.

In short, cores do the heavy lifting, and threads make sure nothing sits idle.

The Advantages of More CPU Cores

As we’ve already learned, more cores mean more power. So, let’s go over some of the advantages of choosing a processor with more cores rather than more threads.

- More Tasks & Raw Power: Multi-core processors mean more raw power, which reduces bottlenecks in multitasking or server-side operations.

- Better Multi-Thread Output: The number of cores is crucial for applications and workloads such as video editing and compiling, where raw power is everything.

- Reduces Per-Core Utilization: The more cores your processor has, the less load falls on each of them, which helps stabilize thermal output and can extend the processor’s lifespan.

- Better Workload Distribution: With multiple cores in the CPU, distribution across containers, services, or virtual machines will be much better.

- Supports High Traffic Loads: Servers with multi-core processors can handle more traffic on web servers, databases, and application backends without slowing down.

In a nutshell, more cores are better than more threads. However, more cores mean a larger investment in server hardware, which many workloads simply do not require.

The Advantages of More CPU Threads

With many workloads, you may not need additional physical cores; instead, virtual threads can help you handle the load without investing in more hardware. Let’s break down the main advantages of relying on more threads rather than more physical CPU cores:

- Each Core Works Harder: The virtual threads help your processor’s real cores work harder and handle more tasks simultaneously instead of one by one.

- Improved Performance: With more CPU threads, you’ll have improved performance in heavily threaded environments, such as virtualization or modern server OS tasks.

- Processors Stay Busy: Extra threads keep the processor occupied under load, reducing idle time during I/O waits or lightweight operations.

- Better Responsiveness: Your CPU will respond faster in systems handling lots of small, concurrent requests, such as APIs or chat services.

- Better Resource Usage: With more threads available, the scheduler can balance work across the available cores more efficiently.

Note: For some workloads, core count is not everything, as other components such as GPU and RAM play a vital role in your server’s overall performance.

Multithreading Explained

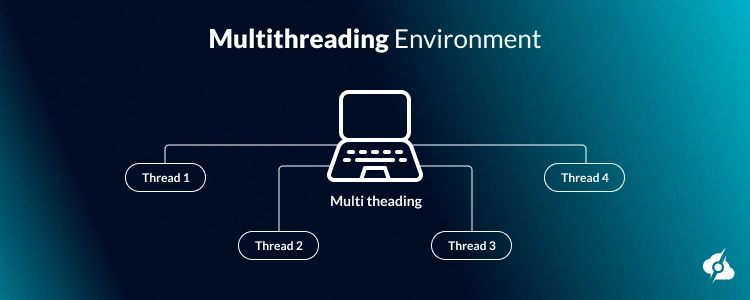

Multithreading is an approach used by modern CPUs and software to boost efficiency and performance by running multiple threads at once. In simple terms, a single process is divided into separate tasks (threads), allowing your CPU to execute them concurrently.

Each thread represents a separate sequence of instructions, and by processing them in parallel across either a single core or multiple cores, the system gets through the instructions faster.

Most importantly, in server environments, multithreading improves the handling of high-throughput workloads. Instead of forcing a single CPU core to handle tasks one by one, it allows multiple threads to be processed simultaneously.

This provides better performance, especially when dealing with independent tasks that don’t rely heavily on shared system resources.
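A minimal Python sketch of this idea, assuming a web-server-like workload made of independent requests, uses a thread pool from the standard library. The `handle_request` and `serve` functions and the 50 ms wait are hypothetical stand-ins; the sleep only mimics time spent waiting on disk or network.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id, io_wait=0.05):
    """Hypothetical request handler: sleeps to mimic an I/O wait."""
    time.sleep(io_wait)
    return f"response-{request_id}"


def serve(request_ids, workers=4):
    """Handle requests concurrently; results come back in request order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(handle_request, request_ids))


start = time.perf_counter()
responses = serve(range(8))
elapsed = time.perf_counter() - start
print(responses[:2], f"{elapsed:.2f}s")
```

Because the eight simulated requests spend their time waiting rather than computing, four worker threads should finish them in roughly two waits instead of eight. In CPython, threads suit this kind of I/O-bound work; CPU-bound work is usually spread across processes instead.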

Multithreading Best Use Cases:

- Handling multiple user requests on a web server

- Background tasks in desktop/mobile applications

- Virtual machines and container orchestration

- Game engines processing AI, physics, or rendering

- Server-side APIs managing large numbers of calls

Hyperthreading Explained

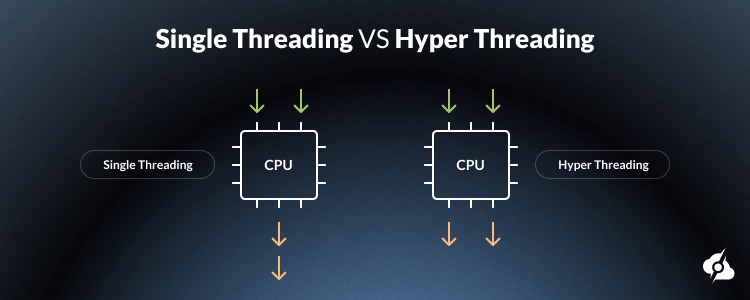

Similar to, but not exactly the same as, multithreading in general, Hyperthreading is Intel’s own implementation of simultaneous multithreading (SMT), a technology that virtually splits one core into two. SMT allows each real core to appear as two logical cores, so two threads share a single physical CPU core and keep its execution units busier.

Ultimately, this is resource sharing where one core handles two threads simultaneously. For example, a quad-core processor appears to the operating system as eight logical cores and can run twice as many threads at once, although the two threads on each core share its resources, so real throughput does not double.

This can boost processing power in workloads where one thread would leave parts of the core idle, such as while waiting for data from memory. By filling in those gaps with a second thread, hyperthreading can noticeably improve efficiency without requiring more physical CPU cores.
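The quad-core arithmetic above can be written out as a small Python sketch. The function names are hypothetical, and the `smt_gain` parameter is an assumed, workload-dependent uplift chosen purely for illustration, not a measured or vendor-published figure.

```python
def logical_cores(physical_cores, threads_per_core=2):
    """Logical cores the OS sees when each core exposes several hardware threads."""
    return physical_cores * threads_per_core


def estimated_throughput(physical_cores, smt_gain=0.25):
    """Rough throughput expressed in 'single-core units'.

    `smt_gain` is an assumption (0.25 means +25% from the second thread);
    the real uplift depends heavily on the workload.
    """
    return physical_cores * (1 + smt_gain)


print(logical_cores(4))         # 8 logical cores on a quad-core CPU
print(estimated_throughput(4))  # 5.0 under the assumed 25% uplift
```

The gap between 8 logical cores and an estimated 5 cores' worth of work is the point: logical cores describe how many threads can be scheduled at once, not how much extra hardware is available.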


Hyperthreading Best Use Cases:

- Virtualization and cloud computing with many processes

- Multitasking environments with frequent context switching

- Background services that don’t fully saturate the real cores

- Running analytics, logs, or system monitoring tools alongside the main workload

- Lightweight microservices or containers on the same server

Multithreading Vs Multicore – (More Cores or Threads)

When it comes to selecting a CPU for your server, you’re always going to be faced with the dilemma of whether to choose more real cores or virtual threads.

Selecting between more CPU cores or more threads depends entirely on what you’re running and how that workload interacts with your hardware. Both increase your ability to process data simultaneously, but in very different ways, so you have to choose between physical processing power and optimization.

When You Need More Cores:

If your workload involves heavy, parallel execution or multiple large applications running simultaneously, you’ll benefit from more physical CPU cores. Some of these workloads include:

- Video editing, 3D rendering, and animation pipelines

- High-traffic database servers and/or analytics engines

- Virtual machines & container clusters with isolated apps

- Scientific simulations and batch processing workloads

- Compiling codebases with build tools like GCC or Clang

Note: Running calculations on more cores increases your CPU’s power consumption.
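For CPU-heavy batch work like the examples in this list, one common pattern is to split the job into chunks and give each chunk to its own worker process so the chunks can run on separate cores. The sketch below is a minimal illustration using Python's standard library; the prime-counting task and the function names are hypothetical placeholders for a real workload.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds):
    """Count primes in [start, stop) by trial division (deliberately CPU-heavy)."""
    start, stop = bounds
    total = 0
    for n in range(max(start, 2), stop):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            total += 1
    return total


def split_range(limit, parts):
    """Split [0, limit) into up to `parts` contiguous chunks of near-equal size."""
    if limit <= 0:
        return []
    step = -(-limit // parts)  # ceiling division
    return [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]


def count_primes_parallel(limit, workers=None):
    """Give each worker process one chunk so chunks can run on separate cores."""
    workers = workers or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, split_range(limit, workers)))
```

When run as a script, the call to `count_primes_parallel(...)` should sit under an `if __name__ == "__main__":` guard so that worker processes can import the module safely. The speedup depends on how many physical cores are free, which is why this kind of workload tends to reward a higher core count.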

When You Need More Threads:

Threads are useful when your workload isn’t maxing out a core and the core still has room to squeeze in additional instructions during idle CPU cycles. Some of these workloads include:

- Lightweight microservices running in the background

- Web servers handling many small concurrent requests

- Real-time monitoring, log processing, or chat applications

- Multi-user remote desktops or shared SaaS platforms

- Background processes and cron jobs on cloud servers

Note: Running additional threads on existing cores typically consumes less power than adding more physical cores.

How Many CPU Cores Should You Choose – Let ServerMania Help

There’s no straightforward answer!

When it comes to choosing a CPU, whether by core count or thread count, you need to carefully evaluate the nature of your workload and whether it needs raw power or optimization.

Besides core count, other components are also critical for your server, whether you’re running single-threaded applications or demanding multi-threaded workloads. If you want to get the most out of your available cores and boost overall performance, we can help.

💬 Chat with a ServerMania Expert Today!

Not sure if your workload needs more cores, more threads, or both? Our engineers are here to help you choose the perfect server for your hosting requirements.
